Memory Management (Operating System)

Key concepts of memory management in operating systems

Photo by Claudio Schwarz on Unsplash

External Fragmentation

There is a problem encountered in Main Memory (RAM) when the memory used by some processes are left behind in non-contiguous manner. This creates a problem when new process wants to come inside the memory, total space of available holes are enough to accommodate the process but the holes are available in non-contiguous fashion. Before jumping into how are these situations' occur we need to know about the memory allocation techniques.

In variable-size partitioning, the main memory is not divided but the process that arrives is allocated according to first-fit, best-fit or worst-fit strategies. First-fit is the memory allocation technique where a process is inserted into the first hole large enough to accommodate the process. Best-fit is another technique where a process is inserted into the smallest hole large enough to accommodate the process. Worst-fit is a technique where a process is inserted into the largest hole of all the available holes, large enough to accommodate the process. All these things can result in external fragmentation.

Fixed Size Partitioning

One possible solution to external fragmentation is to have fixed size partitioning. But, in fixed size partitioning of the main memory, internal fragmentation is the main problem. By fixed size partitioning I mean the memory is divided into several partitions. For example, if total size of the main memory is 4096KB, then we can divide the memory into 4 different partitions each partition of size 1024KB. Again, the point to remember is that even the number of partitions remains constant, the size of each partition may or may not be similar.

In the figure above we can observe the internal fragmentation as well as external fragmentation. To calculate the internal fragmentation we can use simple equation,

\(InternalFragmentation = \sum_1^n Fragmentation(n)\)

\(InternalFragmentation = (4-1) + (8-7)+ (8-7)+ (16-14) = 7MB\)

If a new process P5 of size 7MB arrives, the processes cannot be accommodated in the RAM because the holes are not contiguous, hence this 7MB would become part of external fragmentation.

One possible solution to the External Fragmentation is Compaction. This means to move all the available holes(free space) to one end of the memory, so that the new processes can find the contiguous space.

Paging

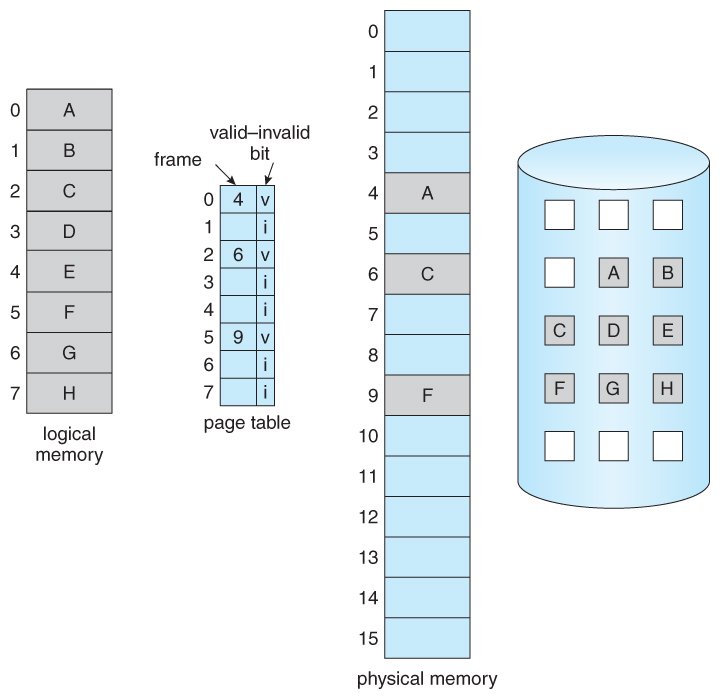

Another solution to avoid External Fragmentation is called Paging. In this method, we divide the process into pages of equal size. The main memory is also divided into frames of equal size. Here, the page size is equal to the frame size. Let us consider an example to understand the concept of paging.

Example

Suppose we have the following table. Our job is to calculate the remaining variables and explain the paging process in this scenario.

| Variable | Size |

| Logical Address Space(LAS) | 4GB |

| Physical Address Space(PAS) | 65MB |

| Page Size | 4KB |

| No. of Pages | ? |

| No. of Frames | ? |

| No. of entries in Page Table | ? |

| Size of page table | ? |

The 4GB logical address space can be represented as \(4*1024*1024*1024\) bytes. This means, we have total number of \(4,294,967,296\) addresses. Now, we can represent 1024 as \(2^{10}\). This means, the address space can be represented as \(2^{32}\)bytes. Hence, we need 32 bits for each address. The logical address is again represented as Page Size and Page Offset. Similarly, the page size can be represented as \(4*1024\) bytes, which can be represented as \(2^{12}\) bytes. Hence, out of 32 bits, 12 bits is used as page size/offset and remaining 20 bits is used as page number. Similarly, 65MB physical address space can be represented as \(64*1024*1024\) bytes. Now, in the same way we can represent this number as \(2^6*2^{10}*2^{10}\) bytes. Therefore, the physical address space can be represented using 26 bytes, where 12 bits is used as frame size/frame offset and remaining 14 bits us used as frame number.

To calculate the No. of Pages,

\(No. of Pages = LAS/PageSize = 4GB/4KB = 1048576 = 2^{20}\)

To calculate the No. of Frames,

\(No. of Frames = PAS/PageSize = 65MB/4KB = 16640 = 2^{14}\)

To calculate No. of entries in Page Table,

\(No.ofentriesinPageTable = No.ofpagesinaprocess\)

To calculate No. of entries in Page Table,

\(SizeofpageTable = Number of pages * Size of a page = 2^{20}*14bits\)

Page Table is used by MMU to map logical address to physical address. Page Table not only consists of frame number but also some optional fields such as Valid/Invalid, Protection, Reference, Caching, Dirty. The valid/invalid flag determines if the page exists(1) or not(0). Protection is used by operating system to determine the permissions read, write and execute the data present in the page. Reference is used to determine if the requested page is requested for the first time or not. Caching is used to enable and disable the cache for the page. Finally, the Dirty bit is used to signify that the data is modified in the main memory but not in the hard disk.

Multilevel Paging

It is obvious that page table must be stored somewhere inside computer so that Main Memory can make use of it for mapping purposes. Yes, it is stored inside a frame in the main memory. But, when the size of the page table exceeds the frame size, the problem arises.

Example

Suppose we have following quantities.

| Variables | Size |

| Physical Address Space | 256MB |

| Logical Address Space | 4GB |

| Frame Size | 4KB |

| Page Table Entry | 2B/16bits |

We need to calculate the size of page table. The number of pages in a page table can be calculated as,

\(No.ofPages = LAS/FrameSize = 4GB/4KB = 2^{20}\)

\(PAS = 256*1024*1024=2^{8}*2^{10}*2^{10} = 2^{28}\)

\(FrameSize = 4KB = 4*1024 = 2^{12} bytes\), which implies that 16 bits can be used to represent a frame in physical address space.

\(SizeofPageTable = 2^{20}*2B = 2MB\)

Hence, the size of page table is larger than the frame size.

You might have remembered that we divided process into pages as well as main memory into frames. But, we only consider the size of a process & size of a page. Now, that we know the problem we also must consider the size of a page table and divide the page table.

\(NewNumberofPages=2MB/4KB=512\)

\(NewSizeofPageTable=512*2B=1024B=1KB\)

\(NumberofPages(InnerPT) = 2^{20}/512 = 2^{11}\)

\(SizeofPageTable(InnerPT) = 2^{11}*2B=4KB\)

Now that we have gathered all the new requirements we shall proceed to know how CPU makes use of inner and outer page table.

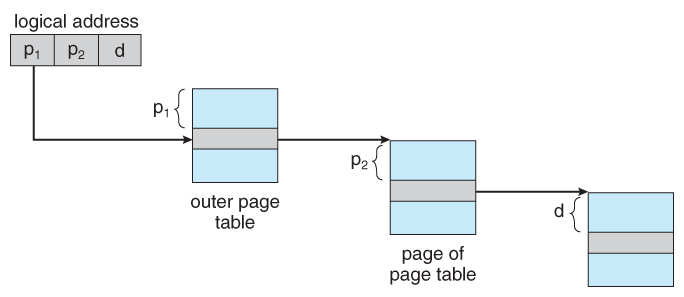

Previously, the logical address would only have 2 entries i.e. page number and page offset. Now that we have two page tables we would have entries \(p_1\) for outer page table and \(p_2\) for inner page table as shown in the figure. The \(d\) represents the page offset.

In our case, \(p_1\) has 9 bits i.e. 512 number of entries in outer page table. Each entry in outer page table corresponds to \(2^{11}\) pages each of size 4KB in inner page table. So, \(p_2\) represents a particular page of size 4KB. The \(d\) represents the page offset from the starting address in the inner page table. The inner page table and offset together will give the actual frame number corresponding to the page number and page offset.

Thrashing

Thrashing is related to degree of multiprogramming. The degree of multiprogramming is the measure of number of processes in the main memory. If we were to increase the number of process in the memory the chances of I/O operation requests becomes higher and they are forced to wait/block. During this time, CPU becomes idle but our goal is to make CPU busy as much as we can so that the throughput is increased. To achieve the degree of multiprogramming we can transfer small portion of pages for every processes, lets say 10%. But, in the worst case there might be continuous page faults which again leads to minimum CPU utilization. This is known as thrashing.

Segmentation

Segmentation is the process where we divide the process into segments, where each segment contains related data. So, what is the main difference between paging and segmentation? Paging simply divides the process or programs into fixed size pages without knowing what's inside it. However, segmentation works on user point of view. In segmentation, every segment has variable size, some smaller some larger. To convert the logical address to physical address MMU uses segment table. With the help of segment table we can retrieve the segment from the main memory using the CPU generated logical address.

The logical address is composed of segment number and segment size/offset. Let's say \(S\) is the number of bits representing segment number and \(d\) is the number of bits represents segment size.

Example

Now, let us take an example of segmentation to further clarify the process. Let the segment number and segment offset/size can be represented using 16 bits. Hence the logical address for Segment 1 can be represented as,

| 001 | 0000110010000 |

The CPU requests for Segment 1 provided the segment size. First, the segment table is looked up for base address of Segment 1. Therefore, Base value 6300 is found in the second row & second column. The Segment 1 is then found in the main memory at the 6300th location. The segment size corresponding to the logical address is then compared to limit entry of the Segment 1. In this case d \(<=\) limit, so we retrieve the whole Segment 1 for CPU to process.

Virtual Memory

One way to think about the virtual memory is that, "Do we have to load the whole process to the main memory before the execution?". The answer is no. All the strategies so far have the same goal: to keep many processes in memory simultaneously to allow multiprogramming. Despite that the entire process must be in memory before it can execute. However, the concept of virtual memory allows CPU to execute processes that are not completely in the main memory. If you think about it as a program, it does not always require all the entire process for execution. Programs often have error handling conditions which seldom gets executed. Arrays/lists are often allocated large memory than they might need. Even in the cases where the entire program is needed it may not all be needed at the same time.

One major advantage of this is that process/programs can be larger than the main memory. Further, virtual memory abstracts main memory into an extremely large, uniform array of storage, separating logical memory as viewed by the programmer from physical memory. Similarly, less I/O operations to swap pages into memory, allows each program would run faster.

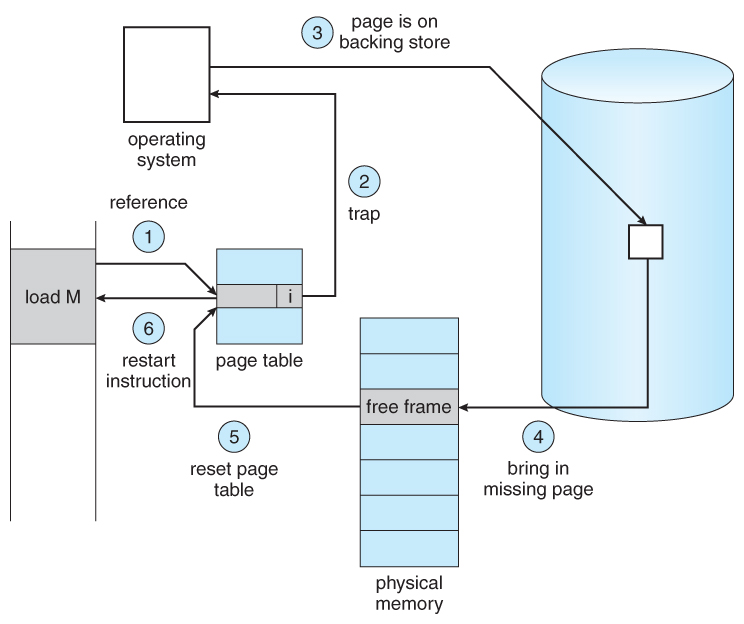

This technique to load pages only as they are needed is called demand paging. Pages that are never accessed are thus never loaded into physical memory. The figure clearly explains the demand paging. The secondary storage contains all the pages of various processes. The valid/invalid bit is used in page table to indicate whether the page is present in the main memory or not. Access to these pages causes a page fault. The paging hardware in translating the address notice the invalid bit, causing a trap to the operating system.

The entire process from page request to page handling is described below.

To determine if the page request is valid, internal table is checked.

If the reference/request is invalid, process is terminated. If it is a valid request but the page is not brought into memory we page it in.

We find a free frame.

We schedule a secondary storage operation to read the desired page into the newly allocated frame.

When the storage read operation is complete, the internal table is modified to indicate the page is in the memory.

We restart the instruction that was interrupted by the trap. The process can now access the page as though it had always been in the memory.

Despite the interruption due to page fault, the state of registers, instruction pointer is stored. So, one important requirement for a demand paging system is the ability to restart any instruction after a page fault.

Page Replacement

Main memory always has a limited space. The number of pages stored can vastly outnumber the number of frames available. So, to accommodate this limitation we use the page replacement algorithm. If our main memory is never fully reserved and can accept as many pages as available then we do not have any problem. But, in the case where we have limited space we need to use one of the page replacement algorithms.

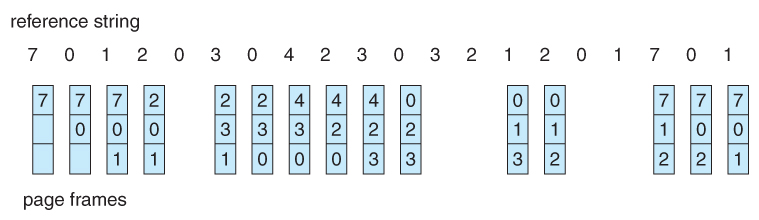

FIFO(First-in First-out): The reference string are the pages requested by the CPU. If any of the pages is not found in the memory, page fault occurs and the page is swapped in from the secondary memory.

In the above example, page 7, page 0 and page 1 are swapped in and inserted into the free frames. Thereafter, the frames available are reserved so we need to use FIFO page replacement technique where the page first inserted is the one that gets replaced with the incoming page. Therefore, page 2 gets replaced with page 7, page 0 is already available so this is the case of page hit. Similarly, page 0 gets replaced with page 3 and so on.

Optimal Page Replacement: In this technique, we simply replace the page that will not be used for the longest period of time.

In the example, when all the frames are occupied, page 7 is the one that will not be used for the longest period of time. Then in the next request, page 0 is already available. When the request for page 3 arrives, page 1 is replaced because among page 2, page 0 and page 1, it is page 1 that will not be used for the longest period of time. The algorithm looks forward in time whereas FIFO looks backward in time.

LRU(Least Recently Used): LRU replacement algorithm associates with each page the time of that page's last use. When a page must be replaced, LRU chooses the page that has not been used for the longest period of time. We can think of this strategy as the optimal page replacement algorithm looking backwards in time.

In the above example, page 7 at step 4 is replaced with page 2 because it is the least recently used with respect to page 0 and page 1. Similarly, page 1 at step 6 is replaced with page 3 because it is least recently used page with respect to page 2 and page 0. The rest of the steps follow the same technique.

This article addresses memory allocation issues, focusing on external and internal fragmentation and solutions like fixed-size partitioning. It explores advanced techniques such as paging and segmentation to improve memory management. Additionally, it discusses virtual memory and demand paging to allow execution of processes larger than main memory. Thrashing and page replacement algorithms like FIFO, Optimal, and LRU are also covered to optimize memory usage and CPU utilization.